Why Reactive Recruitment is Technical Debt

A critical engineer hands in their notice. Suddenly you're staring down an 8-week hiring process while a project deadline looms. You tell yourself you'll backfill quickly. You'll make do. The team will absorb the load temporarily.

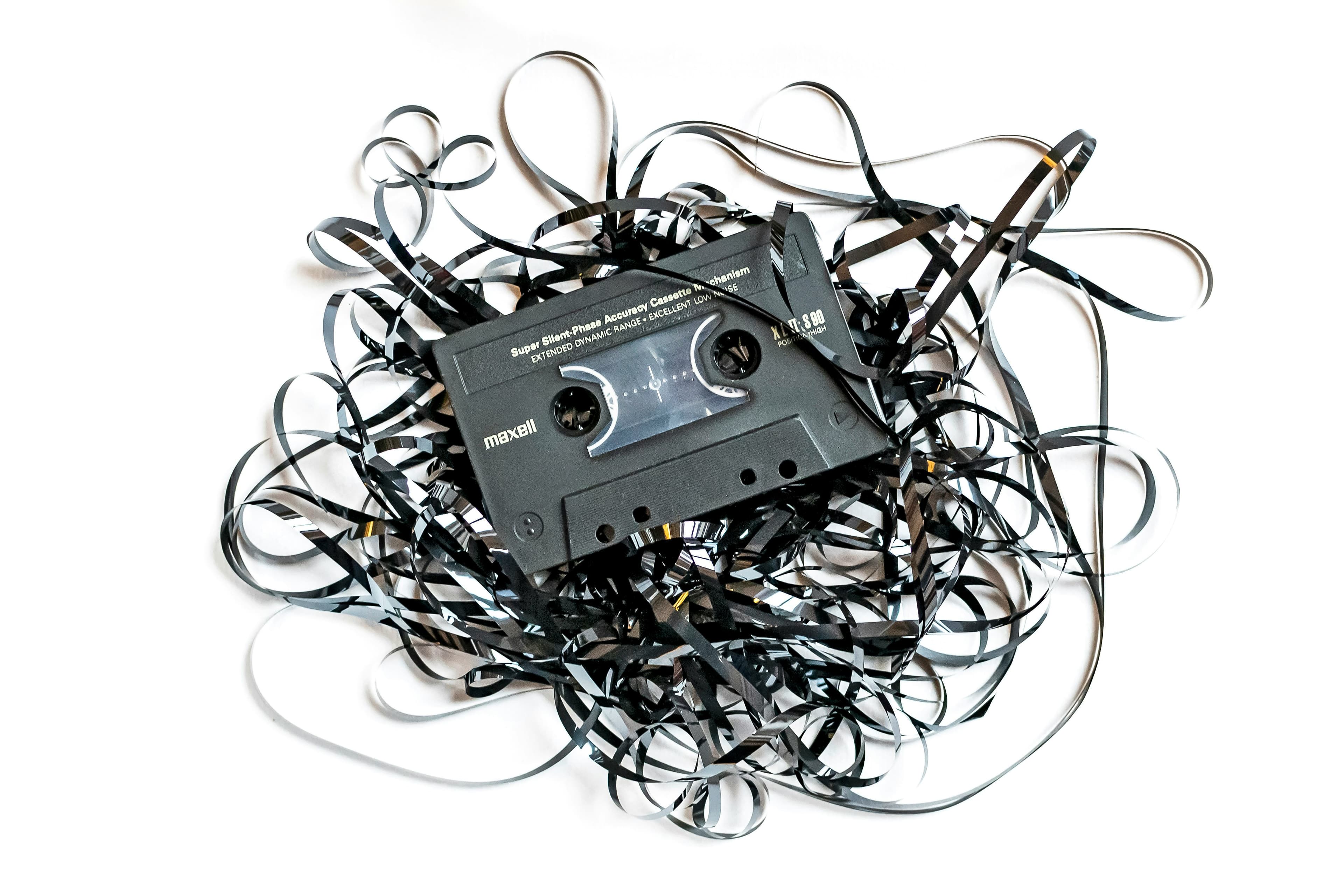

This is the resourcing equivalent of "we'll refactor later."

And like all technical debt, it compounds.

The Interest Rate on Empty Seats

Technical debt is a familiar concept in engineering. You take a shortcut today, knowing you'll pay for it tomorrow. With interest. The longer you leave it, the more expensive the fix becomes.

Reactive recruitment works the same way. The moment a role sits vacant, you start accruing costs that don't show up on a balance sheet but absolutely show up in your delivery capacity.

Weeks 1-2: The remaining team absorbs the workload. Velocity holds, but only because people are working longer hours. You tell yourself it's temporary.

Weeks 3-4: Job ads are live, but quality candidates take time to surface. Meanwhile, the backlog grows. Sprint commitments start slipping. Your best engineers are now context-switching between their own work and covering gaps.

Weeks 5-6: Interviews are underway, but your first-choice candidate has three other offers. You're competing against organisations that moved faster. The team is visibly tired. Someone mentions they've been approached by a recruiter.

Weeks 7-8: You extend an offer. They need to serve four weeks' notice. The project is now officially late. You've lost two months of momentum, and your team has been running hot the entire time.

This isn't a hypothetical. It's the default experience for most NZ infrastructure teams.

The Hidden Line Items

When teams run a post-mortem on a delayed project, they'll identify technical causes: an underestimated migration, an unexpected dependency, a production incident. What rarely makes the list is the three-month period when they were down a senior engineer and everyone was stretched thin.

But the costs are real.

Velocity loss. If a team of five loses one engineer for eight weeks, that's roughly 20% of your delivery capacity gone. Not reduced. Gone. The remaining four aren't 25% more productive to compensate.
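The arithmetic behind that paragraph is below. It assumes every engineer contributes equal capacity, which is a simplification. Here $u$ is the extra productivity each of the remaining four engineers would need to cover the gap:

$$
\frac{1}{5} = 20\% \text{ of capacity}, \qquad 1 \times 8 = 8 \text{ of } 5 \times 8 = 40 \text{ engineer-weeks lost}
$$

$$
4(1 + u) = 5 \quad\Longrightarrow\quad u = \frac{5}{4} - 1 = 25\%
$$

Sustaining a 25% uplift for eight weeks is the kind of overtime that feeds the burnout tax.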

Knowledge drain. The departing engineer takes context with them. The new hire, once they've served their notice and started, needs 2-3 months to reach full productivity. Add the notice period and the ramp-up to the eight-week search, and your effective gap isn't eight weeks. It's closer to five months of impaired output.
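To see where "closer to five months" comes from, here is a minimal sketch that adds up the phases. It is a hypothetical model, not a measured one. The eight-week search and four-week notice period come from the timeline above. The ten-week ramp is an assumed midpoint of the 2-3 months quoted. The model also assumes that productivity during ramp-up rises linearly from zero.

```python
from dataclasses import dataclass


@dataclass
class VacancyModel:
    """Hypothetical model of one unplanned vacancy. All defaults are assumptions."""

    search_weeks: float = 8.0   # ads, screening, interviews, offer
    notice_weeks: float = 4.0   # the new hire serves notice at their old job
    ramp_weeks: float = 10.0    # assumed midpoint of "2-3 months" to full productivity

    def impaired_weeks(self) -> float:
        """Calendar weeks from resignation until the seat is fully productive again."""
        return self.search_weeks + self.notice_weeks + self.ramp_weeks

    def lost_engineer_weeks(self) -> float:
        """Output lost compared with a fully staffed seat.

        The seat produces nothing while empty. During ramp-up, productivity is
        assumed to rise linearly from 0% to 100%, so the ramp costs half its length.
        """
        empty_weeks = self.search_weeks + self.notice_weeks
        return empty_weeks + self.ramp_weeks / 2


model = VacancyModel()
print(model.impaired_weeks(), model.lost_engineer_weeks())
```

With these defaults, the seat is impaired for 22 weeks, a little over five months. Over that period the team loses about 17 engineer-weeks of output. Notice and ramp-up account for 14 of those 22 weeks, so even a fast search leaves a long tail.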

Burnout tax. Engineers covering for absent colleagues aren't just working more hours. They're making more mistakes, producing lower-quality code, and updating their LinkedIn profiles. One resignation often triggers another.

Opportunity cost. Every week spent in survival mode is a week you're not shipping new features, improving reliability, or reducing your own technical debt. The backlog doesn't pause while you recruit.

Add these up, and a single unplanned vacancy can cost a quarter's worth of progress. Yet most teams have no forward visibility on their capacity and no contingency when gaps appear.

Why "Hire When We Need To" Doesn't Work

You would never run a production workload without redundancy. You architect for failure. Multi-region deployments, auto-scaling groups, circuit breakers. You assume components will fail and design systems that degrade gracefully.

Yet the same leaders who insist on 99.99% uptime for their platforms accept single points of failure in their teams.

The assumption behind reactive recruitment is that talent is available on demand. That when you need a Senior Cloud Engineer, you can simply go to market and acquire one within a reasonable timeframe.

This assumption is false.

The NZ market for infrastructure talent is constrained. The engineers you want are employed, and they're not actively looking. The recruitment process (job ads, screening, interviews, offers, notice periods) has a minimum latency of 6-8 weeks, and that's when everything goes smoothly.

You wouldn't tolerate 6-8 weeks of latency in your incident response. Why accept it in your resourcing model?

From Reactive to Resilient

The alternative isn't to hire people you don't need. It's to build infrastructure around your talent pipeline the same way you build infrastructure around your systems.

Capacity planning. Know your current headcount, your projected workload, and the gap between them. Not just for this sprint, but for the next two quarters.
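A capacity plan can start as simple arithmetic. The sketch below compares projected demand with available supply for each quarter. The 13-week quarter is standard. The 0.7 focus factor is a hypothetical placeholder, as are the example figures. The focus factor is the share of each engineer's time left for planned work. Replace all of these with your own numbers.

```python
from dataclasses import dataclass

WEEKS_PER_QUARTER = 13
FOCUS_FACTOR = 0.7  # hypothetical: share of time left after meetings, support, and leave


@dataclass
class QuarterPlan:
    name: str
    headcount: int        # engineers expected to be in seat for the quarter
    demand_weeks: float   # projected workload, in engineer-weeks


def capacity_gaps(plans: list[QuarterPlan]) -> dict[str, float]:
    """Shortfall per quarter in engineer-weeks; positive means under-resourced."""
    gaps = {}
    for plan in plans:
        supply = plan.headcount * WEEKS_PER_QUARTER * FOCUS_FACTOR
        gaps[plan.name] = round(plan.demand_weeks - supply, 1)
    return gaps


# Hypothetical example: one engineer leaves before the second quarter.
plans = [
    QuarterPlan("Q1", headcount=5, demand_weeks=40),
    QuarterPlan("Q2", headcount=4, demand_weeks=50),
]
print(capacity_gaps(plans))
```

In this example, Q1 has slack. Q2 shows a shortfall of roughly 13.6 engineer-weeks, which is about one and a half engineers' worth of focused time. Knowing that two quarters out is what turns a vacancy into a plan rather than a scramble.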

Warm pipelines. Maintain relationships with pre-vetted candidates who match your stack, even when you're not actively hiring. When a gap appears, you're not starting from zero.

Burst capacity. Have a mechanism to deploy skilled engineers within days, not weeks. This might mean a contractor bench, a managed services partner, or both. The point is eliminating the latency between "we need someone" and "someone is working."

Redundancy by design. No single person should be the only one who understands a critical system. Cross-training isn't a nice-to-have. It's your human failover.
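You can audit human failover the same way you would audit infrastructure redundancy. The sketch below assumes you keep a simple map of critical systems to the people who can operate them unaided; this map is hypothetical. The code flags every system with fewer than two such people.

```python
MIN_OPERATORS = 2  # hypothetical threshold: at least one person to fail over to


def single_points_of_failure(operators: dict[str, set[str]]) -> dict[str, list[str]]:
    """Return critical systems with fewer than MIN_OPERATORS people who can run them.

    Each value lists the current operators (sorted); an empty list means nobody.
    """
    return {
        system: sorted(people)
        for system, people in operators.items()
        if len(people) < MIN_OPERATORS
    }


# Hypothetical inventory; system and engineer names are placeholders.
operators = {
    "billing-database": {"engineer-a"},
    "kubernetes-platform": {"engineer-a", "engineer-b", "engineer-c"},
    "dns-and-certificates": set(),
}
print(single_points_of_failure(operators))
```

Each flagged system is a cross-training target. Here, engineer-a is the only person who can run billing-database, so their resignation is exactly the scenario this article opens with.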

None of this is radical. It's applying principles you already use in systems design to the problem of team capacity.

The Audit

Most teams don't know how exposed they are until it's too late.

Here's a quick test: If your most critical engineer resigned tomorrow, how long before a qualified replacement is productive in the role?

If the answer is "months," you have a single point of failure.